Although AnimateDiff provides a motion model that animates a sequence of frames, the frame-to-frame variability of Stable Diffusion output often causes serious problems such as flickering and inconsistency in the resulting video. With the tools currently available, combining IPAdapter with ControlNet OpenPose is a convenient way to address this.

Preparatory Work

You can use my workflow directly for testing. For installation, refer to my previous article, [ComfyUI] AnimateDiff Image Workflow.

This workflow also uses FreeU, and I highly recommend that everyone install it.

ComfyUI also has a built-in FreeU node that you can use.

IPAdapter also needs to be installed separately. Two versions are available, and either can be used, although the older one will not receive further updates.

Before using IPAdapter, please download the relevant model files.

For SD1.5, you will need the following files:

- ip-adapter_sd15.bin

- ip-adapter_sd15_light.bin - Use this model when your prompt is more important than the input reference image.

- ip-adapter-plus_sd15.bin - Use this model when you want to reference the overall style.

- ip-adapter-plus-face_sd15.bin - Use this model when you only want to reference the face.

For SDXL, you will need the following files:

- ip-adapter_sdxl.bin

- ip-adapter_sdxl_vit-h.bin - Although this model targets the SDXL base model, it still requires the SD1.5 image encoder.

- ip-adapter-plus_sdxl_vit-h.bin - Same as above.

- ip-adapter-plus-face_sdxl_vit-h.bin - Same as above, but this one is for face reference only.

Place these files in either custom_nodes/ComfyUI_IPAdapter_plus/models or custom_nodes/IPAdapter-ComfyUI/models.
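If you want to confirm that the files ended up in the right place, a quick check like the following can help. This is only a minimal sketch: the function name `find_ipadapter_models` and the `"sd15"` / `"sdxl"` keys are my own labels, and it assumes you pass in the path to your ComfyUI folder.

```python
from pathlib import Path

# File names as listed above; which ones you need depends on your base model.
IPADAPTER_MODELS = {
    "sd15": [
        "ip-adapter_sd15.bin",
        "ip-adapter_sd15_light.bin",
        "ip-adapter-plus_sd15.bin",
        "ip-adapter-plus-face_sd15.bin",
    ],
    "sdxl": [
        "ip-adapter_sdxl.bin",
        "ip-adapter_sdxl_vit-h.bin",
        "ip-adapter-plus_sdxl_vit-h.bin",
        "ip-adapter-plus-face_sdxl_vit-h.bin",
    ],
}

# The two candidate folders, relative to the ComfyUI root.
MODEL_DIRS = [
    "custom_nodes/ComfyUI_IPAdapter_plus/models",
    "custom_nodes/IPAdapter-ComfyUI/models",
]


def find_ipadapter_models(comfy_root, family):
    """Map each expected file name to the folder it was found in, or None."""
    root = Path(comfy_root)
    found = {}
    for name in IPADAPTER_MODELS[family]:
        found[name] = next(
            (d for d in MODEL_DIRS if (root / d / name).is_file()), None
        )
    return found


if __name__ == "__main__":
    # "ComfyUI" is an assumed install location; adjust it to your setup.
    for name, folder in find_ipadapter_models("ComfyUI", "sd15").items():
        print(f"{name}: {folder or 'MISSING'}")
```

You do not need every file in the list, only the ones for the models you actually plan to load, so a few MISSING lines are fine.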

IPAdapter also requires a CLIP Vision image encoder, so please place the following two files in ComfyUI/models/clip_vision/. You can rename the files to distinguish the SD1.5 one from the SDXL one.

- SD1.5 model - This file is needed for all SDXL models with the suffix vit-h.

- SDXL model
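The pairing rule between IPAdapter models and encoders is easy to mix up, so here it is as a small sketch based only on the file names listed above. The function name and the returned labels `"sd15"` / `"sdxl"` are my own, not anything ComfyUI defines.

```python
def required_image_encoder(ipadapter_file):
    """Return which CLIP Vision encoder an IPAdapter model file needs.

    The returned labels ("sd15" / "sdxl") are illustrative only.
    """
    name = ipadapter_file.lower()
    if "sd15" in name:
        return "sd15"
    if "sdxl" in name:
        # SDXL adapters with the vit-h suffix still use the SD1.5 encoder.
        return "sd15" if "vit-h" in name else "sdxl"
    raise ValueError(f"Unrecognized IPAdapter model file: {ipadapter_file}")
```

For example, `required_image_encoder("ip-adapter-plus_sdxl_vit-h.bin")` gives `"sd15"`, while `required_image_encoder("ip-adapter_sdxl.bin")` gives `"sdxl"`.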

AnimateDiff + IPAdapter

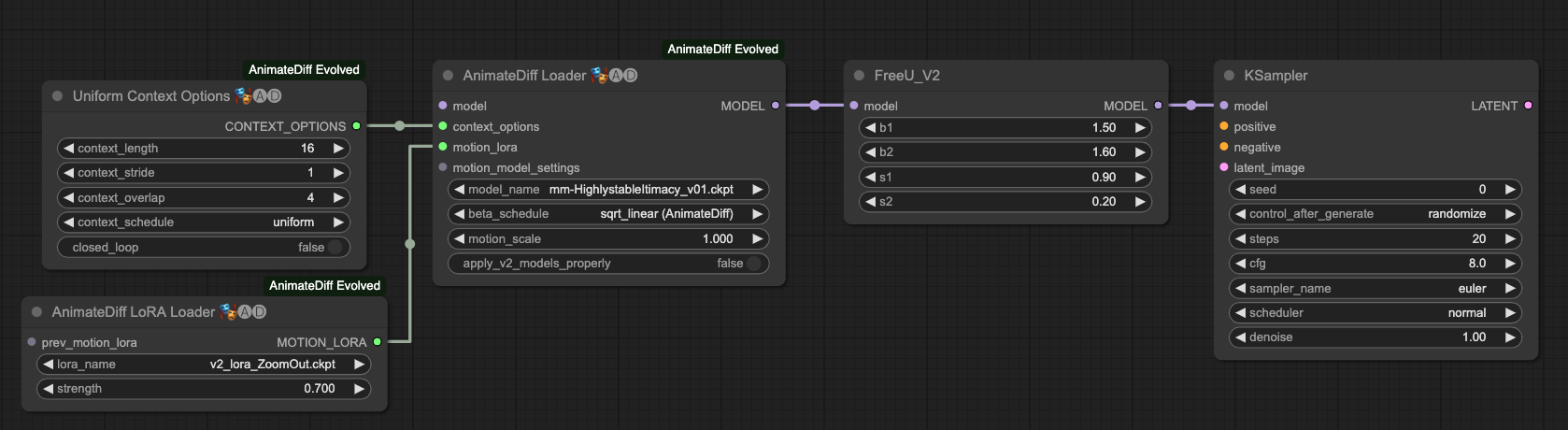

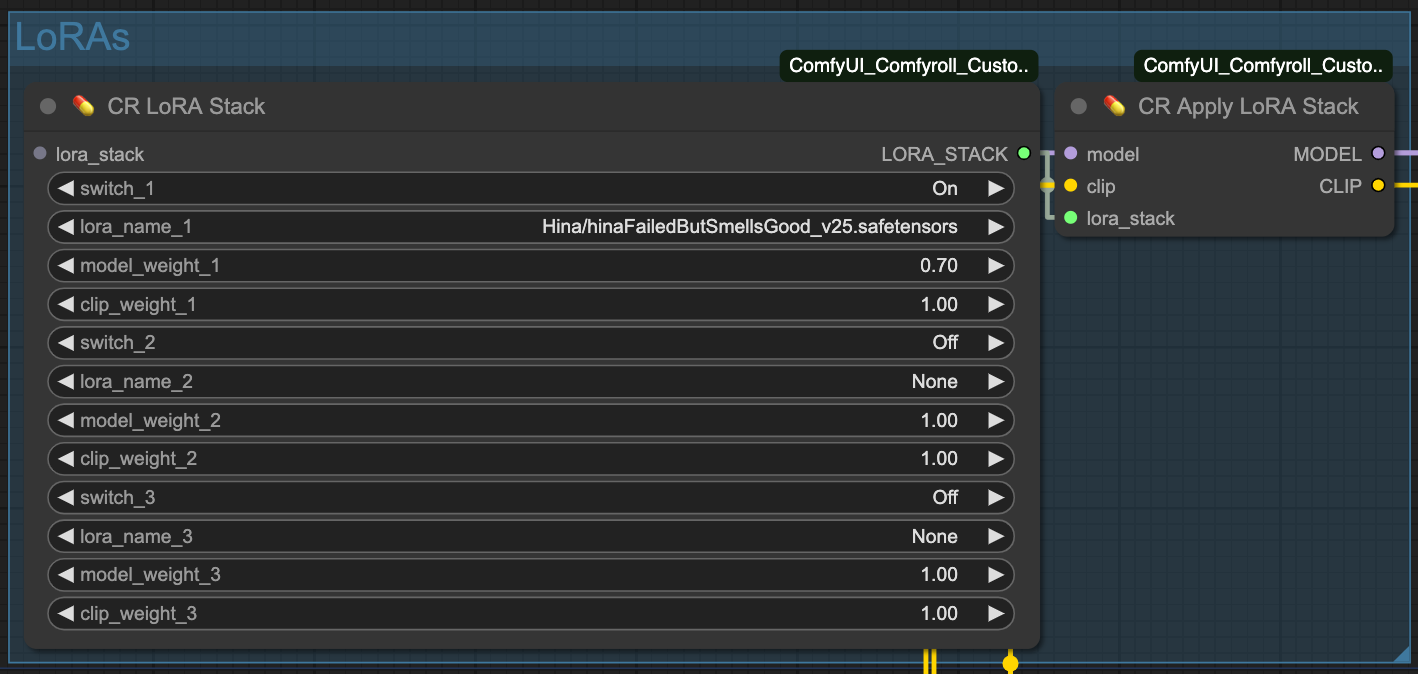

Let's first look at the general configuration for AnimateDiff.

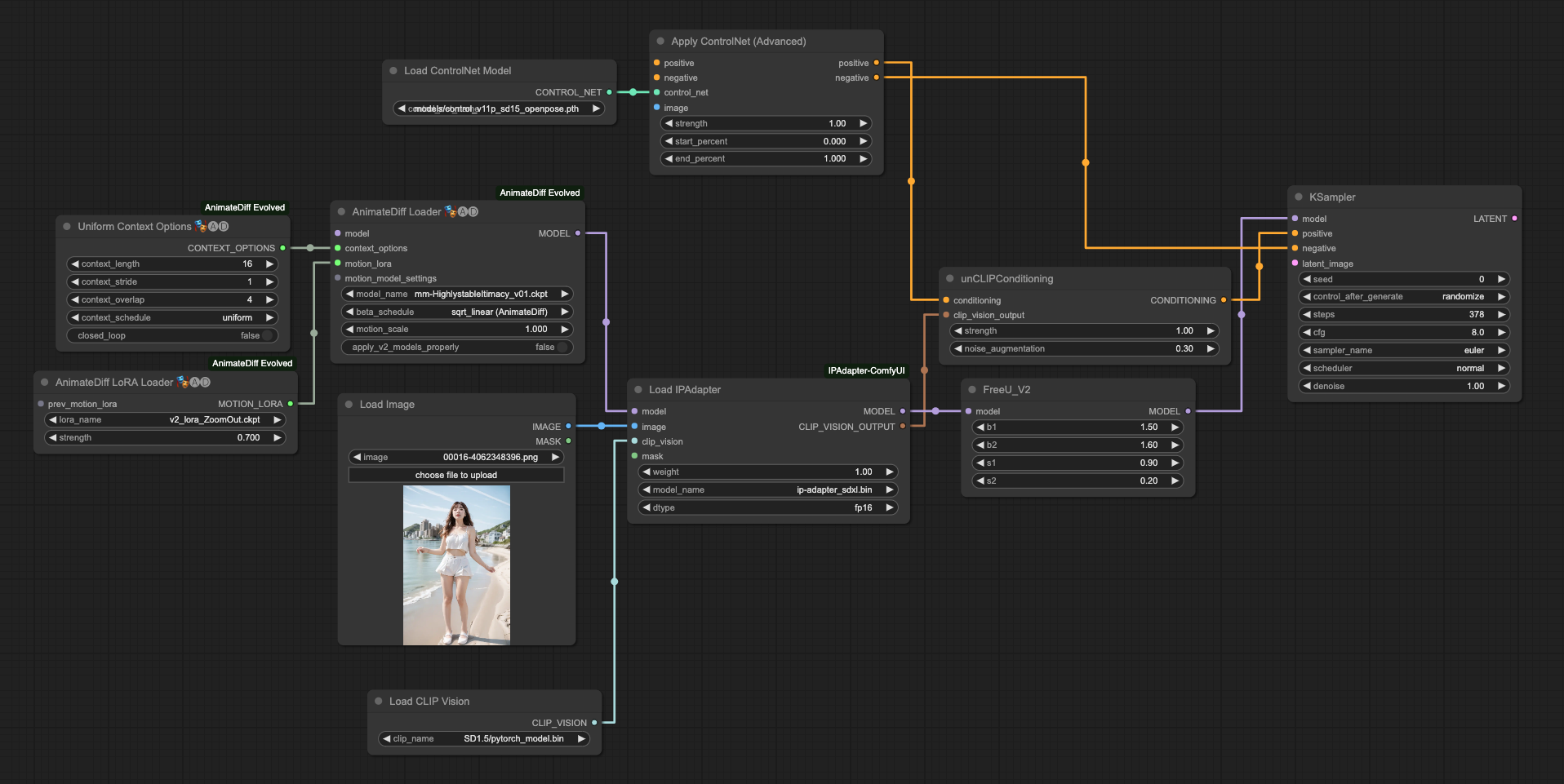

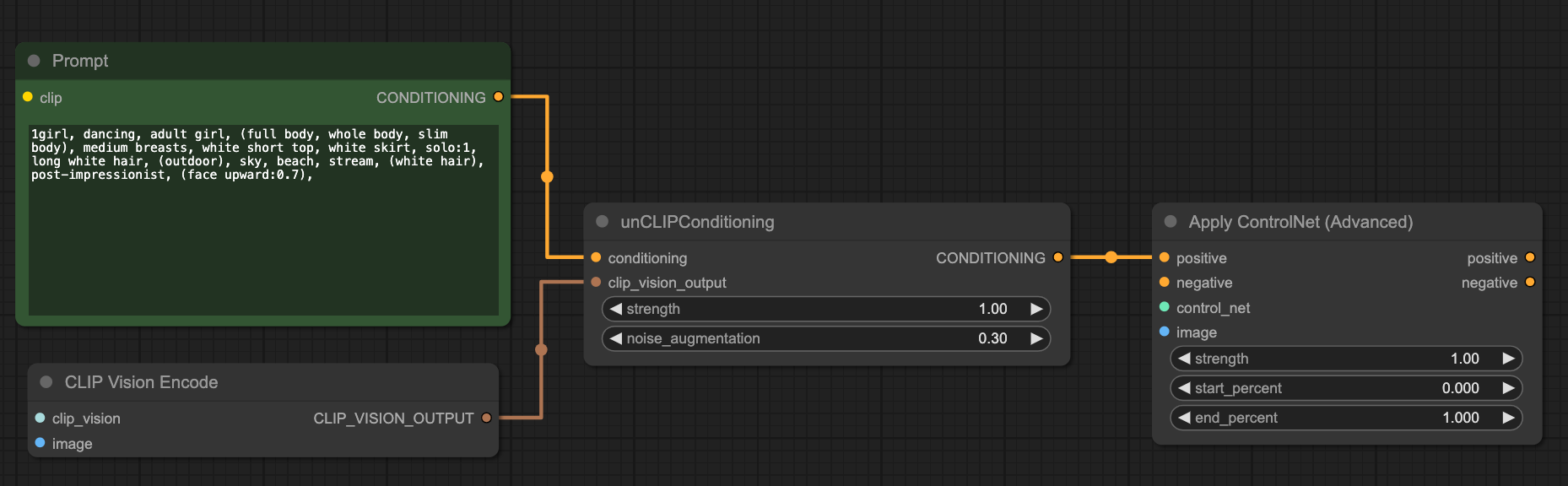

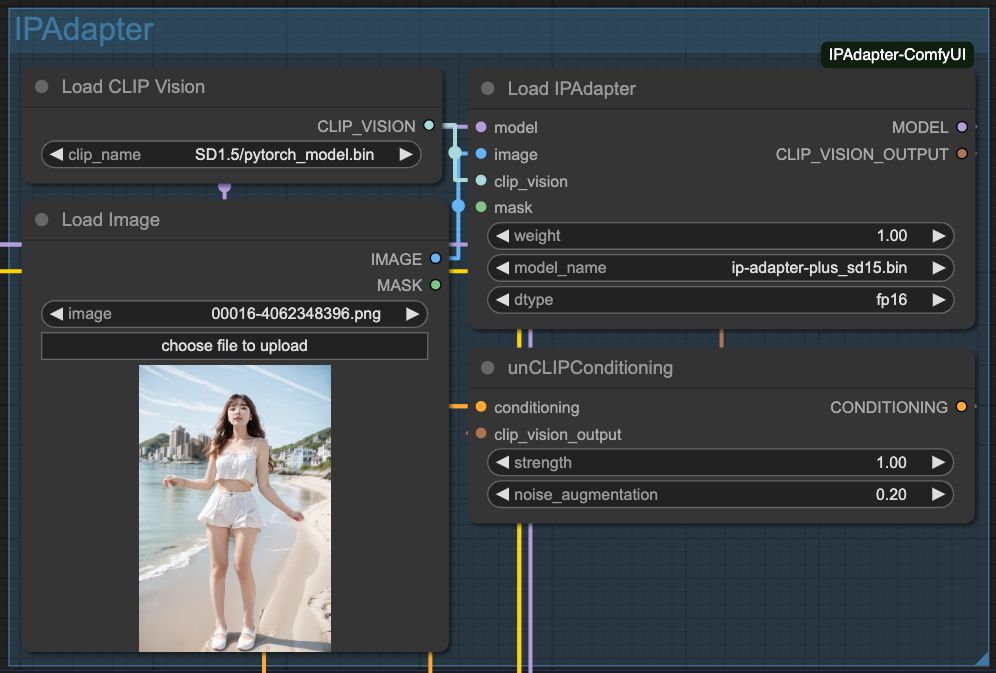

Since this part uses IPAdapter, we need to find a place to insert it. Please note that the example here uses IPAdapter-ComfyUI, but you can replace it with ComfyUI IPAdapter Plus if you prefer.

The following outlines the process of connecting IPAdapter with ControlNet:

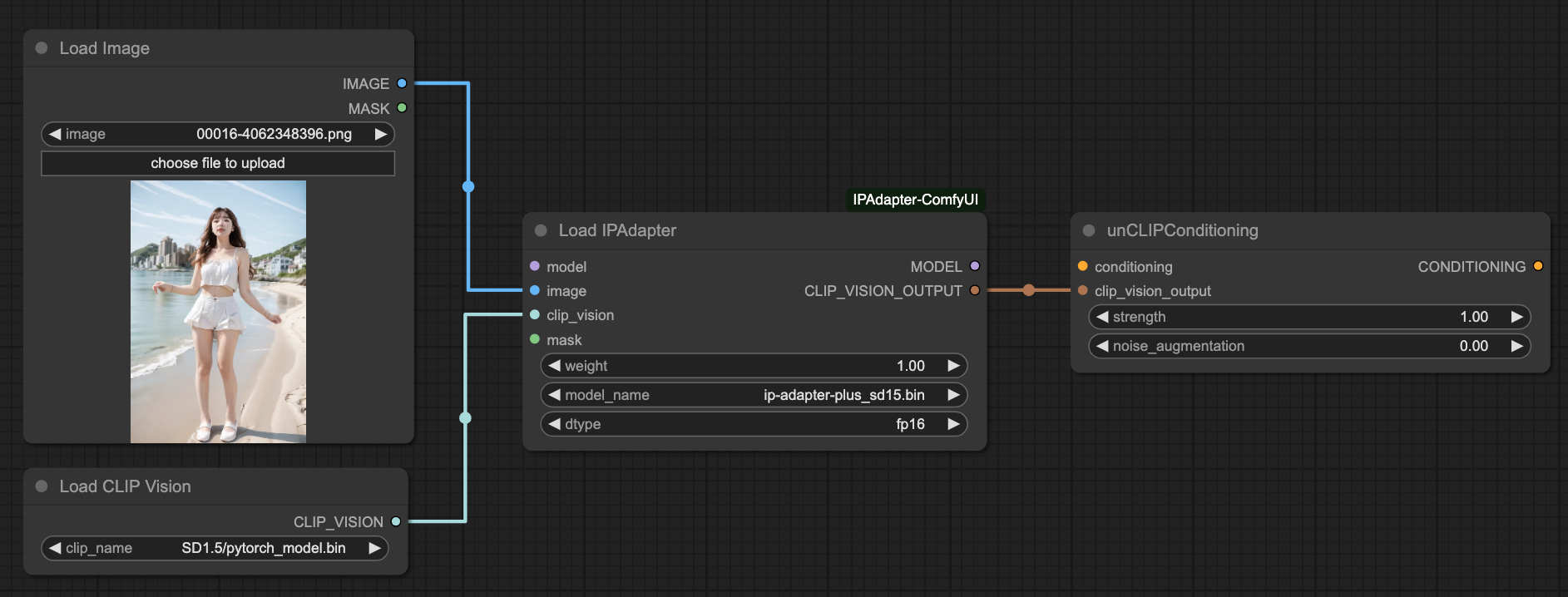

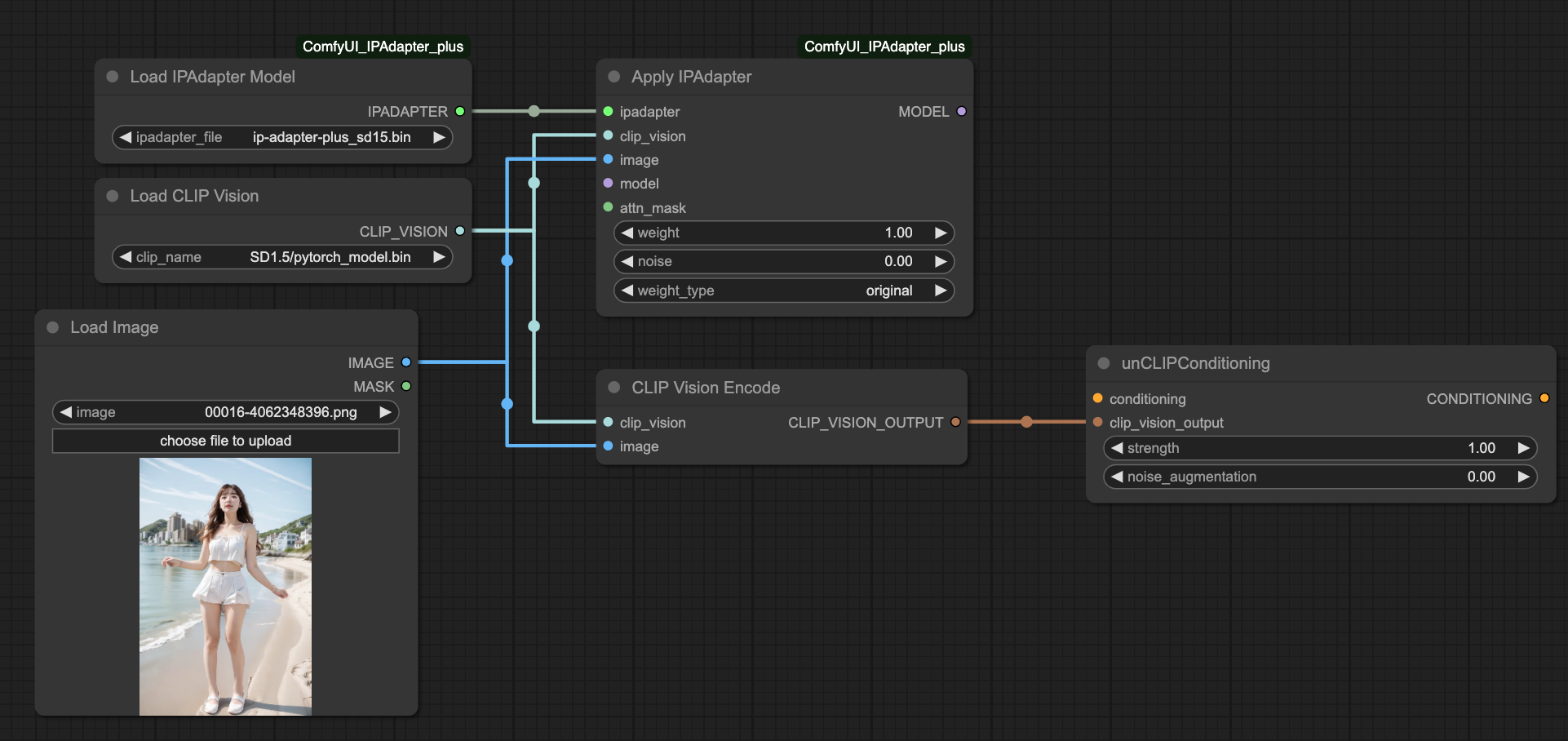

The wiring is similar for both IPAdapter versions. Here are two reference examples for comparison:

IPAdapter-ComfyUI

ComfyUI IPAdapter Plus

This should give you a general understanding of how to connect AnimateDiff with IPAdapter.

ControlNet + IPAdapter

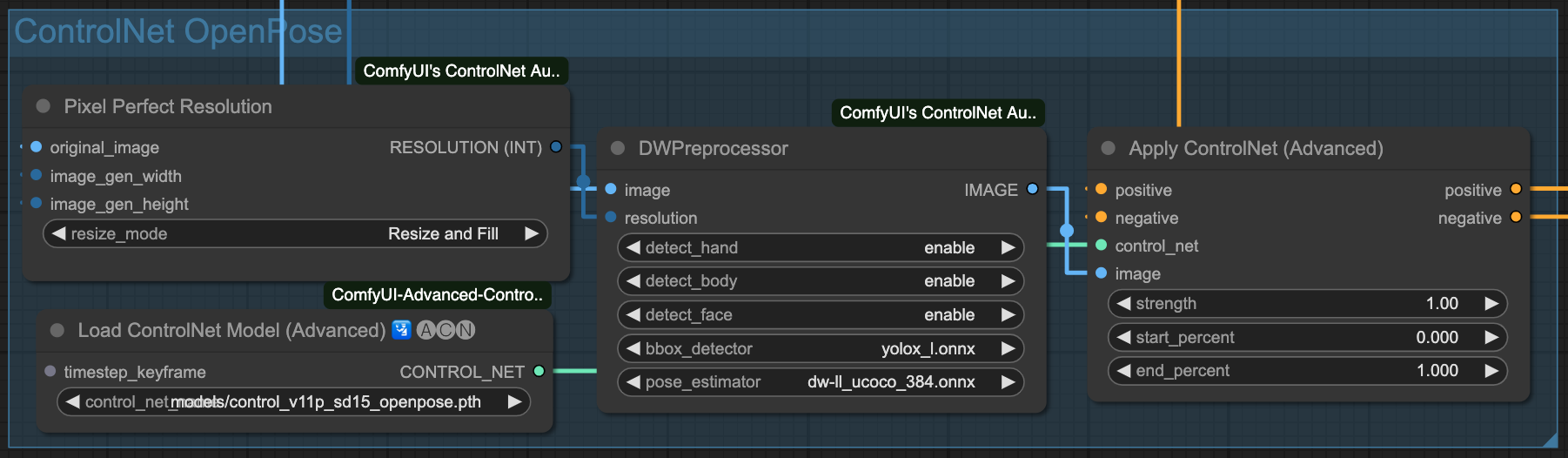

Next, we add an OpenPose ControlNet to control the input coming from IPAdapter, which gives better output.

If your input images are already skeleton images, you don't need the DWPreprocessor. My input is a video file, so I let the preprocessor extract the poses for me.

The positive prompt from IPAdapter's unCLIPConditioning needs to be connected to ControlNet so that ControlNet can control IPAdapter. Below is a schematic flow for reference.

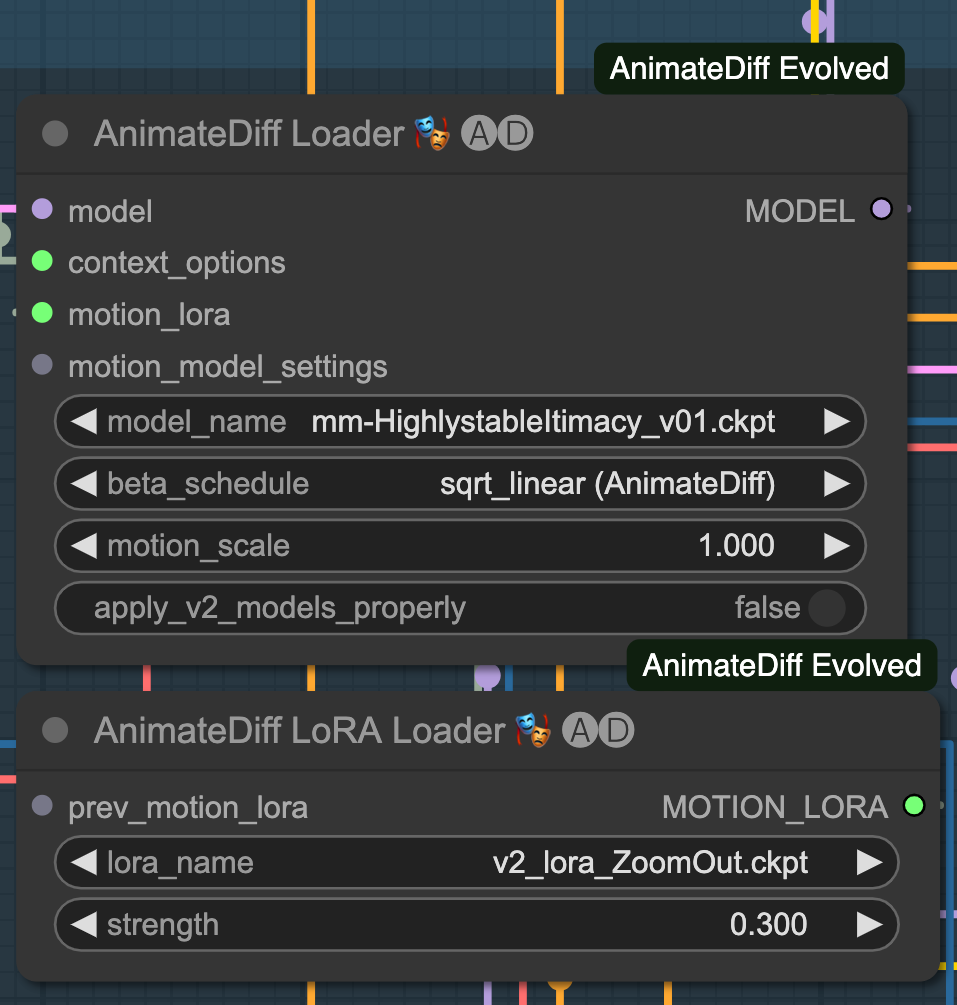

AnimateDiff Motion Lora

For a smoother animation, I use a Motion Lora to add a subtle, gradual motion effect. It's optional, and you can leave it out if you prefer.

I chose v2_lora_ZoomOut.ckpt here, and you can try the other Motion Lora effects on your own. Since I don't want it to affect the visuals too much, I give it a weight of only 0.3.

In my testing, if the overall movement in your video is quite complex, it is better not to use it.
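To get a feel for why a low weight keeps the effect subtle, here is a toy sketch. It assumes the weight acts as a simple multiplier on the update that the Lora adds to the base model weights, which is how Lora strength is commonly applied. The numbers are made up for illustration, and this is not the actual AnimateDiff code.

```python
def apply_lora(base, delta, strength):
    """Return base + strength * delta, element by element."""
    return [
        [b + strength * d for b, d in zip(base_row, delta_row)]
        for base_row, delta_row in zip(base, delta)
    ]


# Made-up 2x2 "weights" purely for illustration.
base = [[1.0, 0.0], [0.0, 1.0]]
delta = [[0.5, -0.5], [1.0, 0.0]]

subtle = apply_lora(base, delta, 0.3)  # the weight used in this workflow
full = apply_lora(base, delta, 1.0)    # the Lora at full strength

print(subtle)
print(full)
```

At 0.3 each weight moves less than a third of the way toward the full Lora result, so the zoom-out motion nudges the animation rather than taking it over.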

Compilation Process

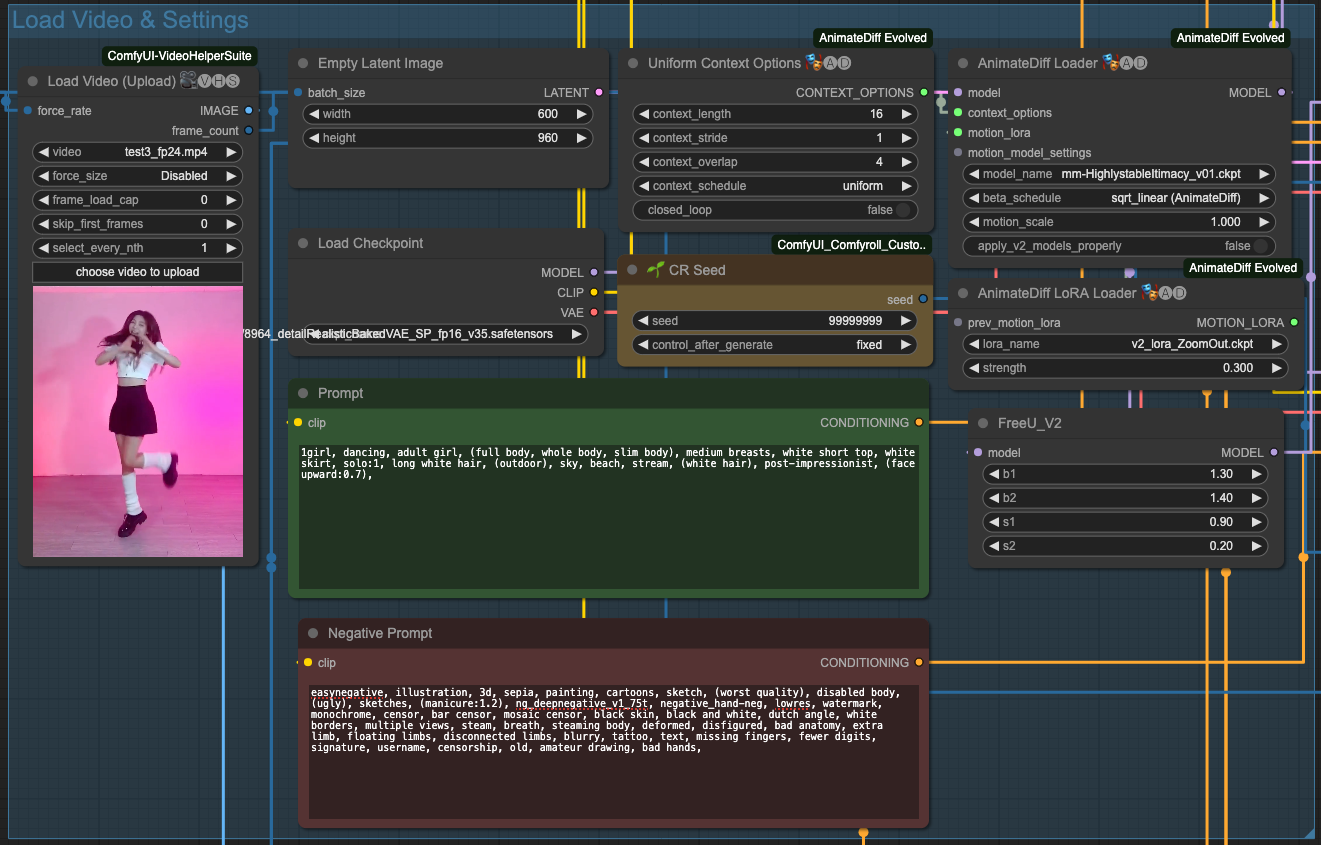

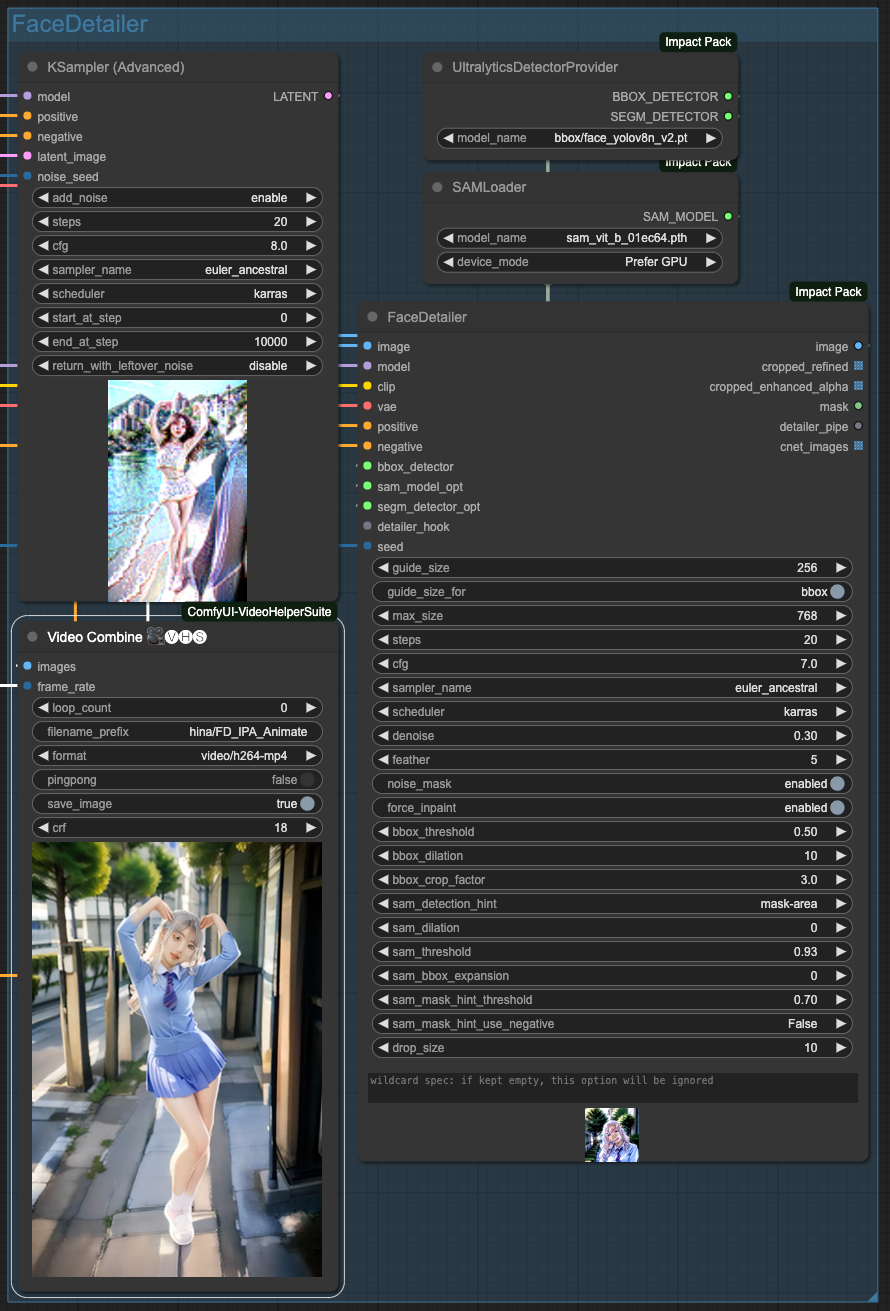

Finally, let's combine these processes:

- Load the video, models, and prompts, and set up the AnimateDiff Loader.

- Configure IPAdapter.

- Set up ControlNet.

- Configure Lora; if you don't want to use it, you can bypass it.

- Set up the final output and refine the face.

Finally, we proceed with the output and face refinement.
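The order of the steps, including the optional Lora stage, can be sketched as a simple list. This is only an outline in plain Python to show the idea of bypassing one stage. The `Stage` class and `active_stages` function are hypothetical names of my own and have nothing to do with ComfyUI's actual API.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    bypassed: bool = False  # a bypassed stage is skipped, like a bypassed node


def active_stages(stages):
    """Return the names of the stages that will actually run, in order."""
    return [s.name for s in stages if not s.bypassed]


workflow = [
    Stage("Load video, models, and prompts; set up the AnimateDiff Loader"),
    Stage("Configure IPAdapter"),
    Stage("Set up ControlNet"),
    Stage("Configure Motion Lora", bypassed=True),  # optional stage
    Stage("Final output and face refinement"),
]

for step, name in enumerate(active_stages(workflow), start=1):
    print(step, name)
```

With the Lora stage bypassed, the remaining four stages still run in the same order, which is why skipping it does not require rewiring the rest of the workflow.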

Conclusion

I use only OpenPose here because IPAdapter is already referencing the overall style. Adding further ControlNets such as SoftEdge or Lineart might interfere with the IPAdapter reference results.

Of course, this is not guaranteed to happen. If your source images are not very complex, using one or two ControlNets can still give good results.
